The default maximum recursion depth is 5. This is sometimes referred to as recursive downloading.

Use Wget Command To Download Files From Https Domains Nixcraft

Outputs file to directory mydir.

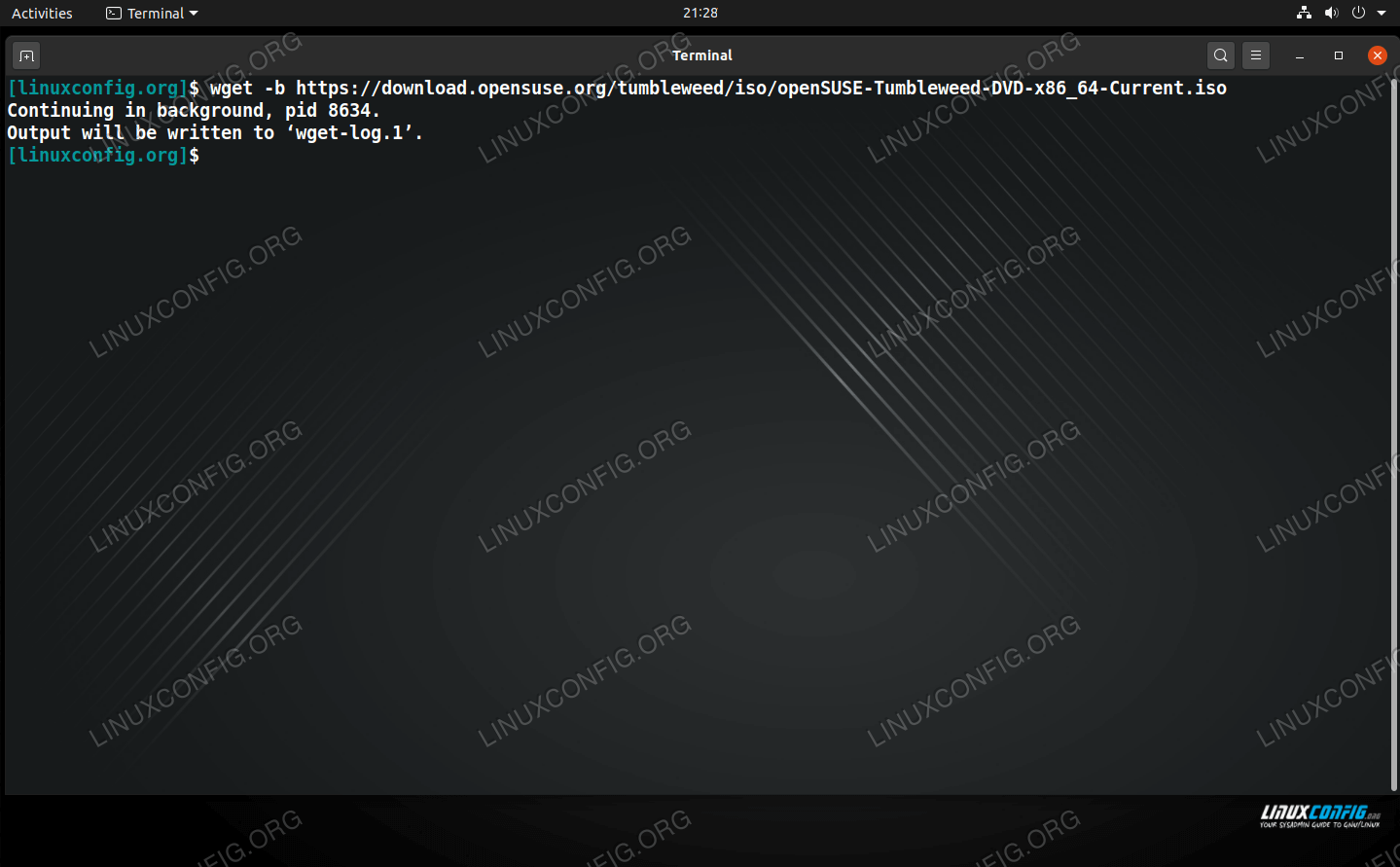

Android wget recursive. Download whole or parts of websites with ease. Recursive downloading also works with FTP, where Wget can retrieve a hierarchy of directories and files. This is cumbersome, so if we want to use Wget from any directory, we need to add an environment variable.

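Wget can resume a failed download. In `http://foo/bar/` (with a trailing slash), Wget will consider `bar` to be a directory, while in `http://foo/bar` (no trailing slash) it treats `bar` as a filename. While doing that, Wget respects the Robot Exclusion Standard (robots.txt).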

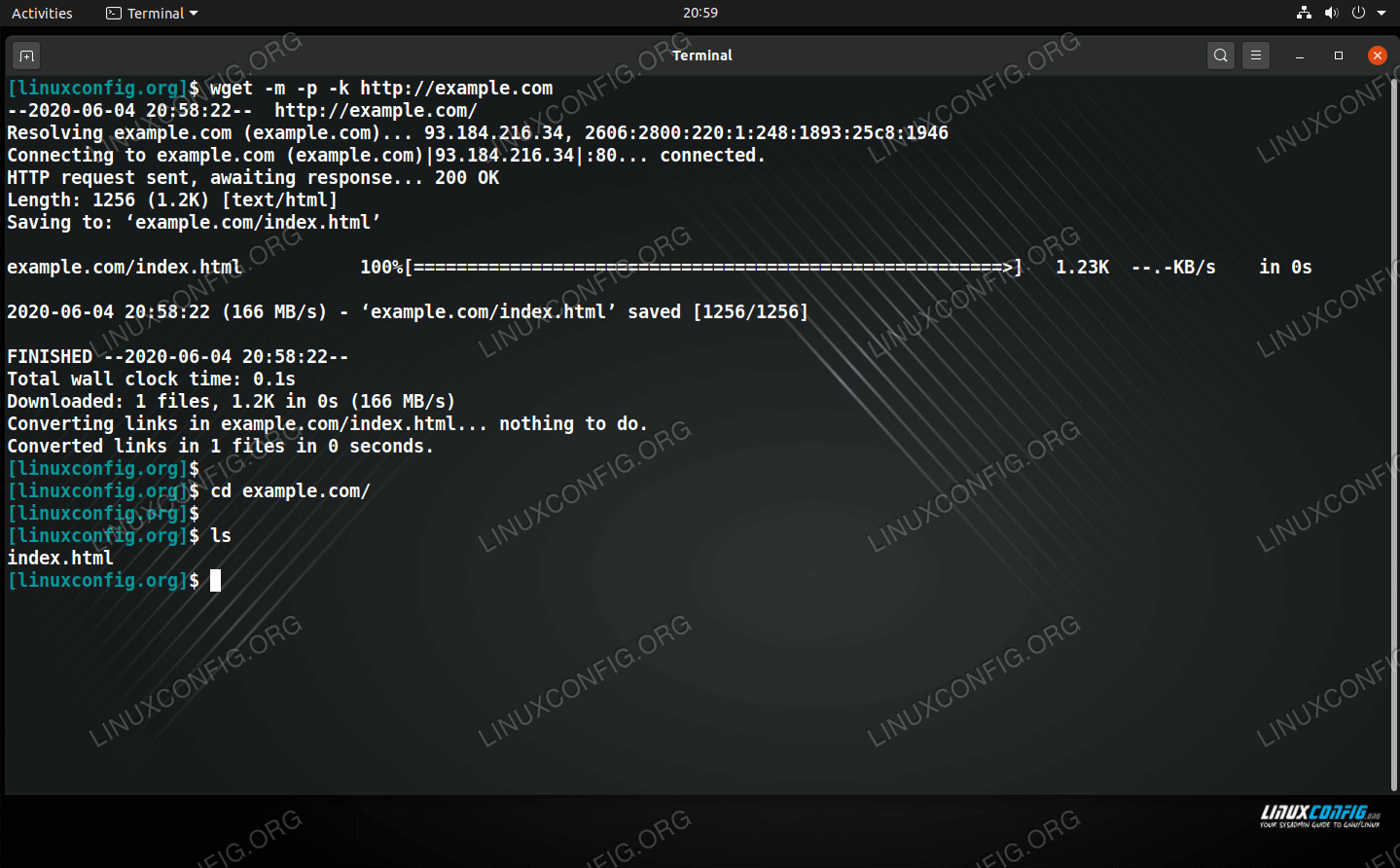

Wget can be instructed to convert the links in downloaded HTML files to point to the local files for offline viewing. Then try a shorter version of your command, without the recursion and levels, in order to do a quick one-page test. `-O tmpoutput`. It is, however, possible to exploit the issue with mirroring/recursive options enabled, such as `-r` or `-m`.

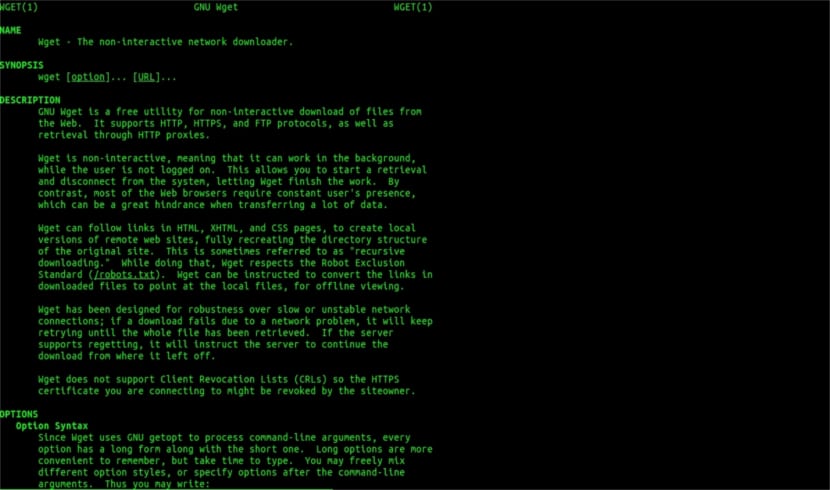

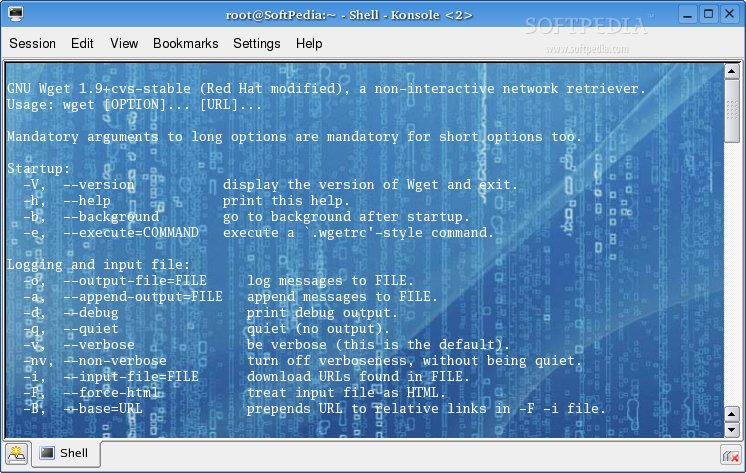

Another limitation is that an attacker exploiting this vulnerability can only upload the malicious file to the current directory from which wget was run, or to a directory specified by the `-P` option (`directory_prefix`). Wget can be used with just a URL as an argument, or with many arguments if you need to fake the user-agent, ignore robots.txt files, rate-limit it, or otherwise tweak it. Wget is a free tool to crawl websites and download files via the command line.
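As a rough sketch of the "many arguments" case, the function below prints (rather than runs) a wget command that sets a user-agent string and throttles the transfer. The function name `polite_cmd`, the `Mozilla/5.0` string, the `200k` default, and the example.com URL are all illustrative assumptions.

```shell
#!/bin/sh
# Print a throttled wget command instead of executing it.
# polite_cmd, the user-agent value, and the URL are hypothetical placeholders.
polite_cmd() {
    url="$1"
    rate="${2:-200k}"   # default bandwidth cap if none is given
    # --user-agent sets the identification string sent to the server,
    # --limit-rate caps download speed, --wait pauses between requests.
    printf 'wget --user-agent=Mozilla/5.0 --limit-rate=%s --wait=1 %s\n' "$rate" "$url"
}

polite_cmd https://example.com/
```

Printing the command first makes it easy to inspect the options before pasting it into a terminal.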

Download a list of files at once. Start by creating a directory for the downloads: `mkdir dnld`.
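One way to download a list of files at once is wget's `-i` option, which reads URLs from a file. The sketch below only writes a hypothetical list and prints the command it would run. The two example.com URLs are placeholders, and `-P dnld` assumes the `dnld` directory mentioned above.

```shell
#!/bin/sh
# Write a hypothetical URL list (one URL per line) to a temporary file.
list=$(mktemp)
cat > "$list" <<'EOF'
https://example.com/a.pdf
https://example.com/b.pdf
EOF

# Count the URLs so the summary line can report how many would be fetched.
count=$(wc -l < "$list" | tr -d ' ')

# -i reads URLs from the file; -P sets the directory the files are saved into.
echo "would fetch $count URLs with: wget -i $list -P dnld"
rm -f "$list"
```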

There's a risk to doing recursive downloads of a site, however, so it's sometimes best to use limits to avoid grabbing the entire site. In the aria2c command, `-c` allows continuation of the download if it gets interrupted, `-x 10` allows up to 10 connections per server, `-s 10` splits the download across 10 connections, and `-d mydir` selects the download directory.

The option `--domains` will, somewhat contrary to intuition, only work together with `-H`. `-r` (`--recursive`): turn on recursive retrieving.

One alternative method is to use the wget command. Wget is a nice tool for downloading resources from the internet.

Wget ported to Android. You can also include `--no-parent` if you want to avoid downloading folders and files above the current level.

It can be used to fetch images, web pages, or entire websites. The basic command I use to get maximum bandwidth is aria2c with several connections per server. Sometimes you want to create an offline copy of a site that you can take with you and view even without internet access.

This is mentioned in. Wget provides recursive downloads, which means that Wget downloads the requested document, then the documents linked from that document, then the next, and so on.

`wget --recursive --level=10 --convert-links -H --domains=www.btlregion.ru btlregion.ru`: `--span-hosts` (`-H`) allows wget to follow links pointing to other domains, and `--domains` restricts this to only the listed domains, to avoid downloading the whole internet.
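The pairing of `--span-hosts` with `--domains` can be sketched as a small helper that joins any number of allowed domains into the comma-separated list wget expects. The helper name `span_cmd` is hypothetical, and the command is printed rather than executed.

```shell
#!/bin/sh
# Print a host-spanning wget command restricted to an allow-list of domains.
# Usage: span_cmd START_URL DOMAIN [DOMAIN...]
span_cmd() {
    url="$1"
    shift
    # Join the remaining arguments with commas: "$*" uses the first IFS character.
    domains=$(IFS=,; printf '%s' "$*")
    printf 'wget --recursive --level=10 --convert-links --span-hosts --domains=%s %s\n' \
        "$domains" "$url"
}

span_cmd http://btlregion.ru www.btlregion.ru btlregion.ru
```

Without `--span-hosts`, the `--domains` list has nothing to restrict, because wget stays on the starting host by default.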

Open Advanced system settings. With this in mind, please follow this path. If there is a file named ubuntu-5.10-install-i386.iso in the current directory, Wget will assume that it is the first portion of the remote file, and will ask the server to continue the retrieval from an offset equal to the length of the local file.
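The resume behavior described above depends on a partial file with the same name sitting in the current directory. A minimal sketch of that check follows. The function name `resume_cmd` and the example.com URL are assumptions, and the command is only printed. In practice `wget -c` also works when no partial file exists, so the check is purely illustrative.

```shell
#!/bin/sh
# Print `wget -c URL` when a local file matching the URL's basename exists,
# otherwise print a plain `wget URL`. Nothing is downloaded.
resume_cmd() {
    url="$1"
    file="${url##*/}"   # strip everything up to the last slash
    if [ -f "$file" ]; then
        printf 'wget -c %s\n' "$url"
    else
        printf 'wget %s\n' "$url"
    fi
}

resume_cmd https://example.com/ubuntu-5.10-install-i386.iso
```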

The `-r` in this case tells wget you want a recursive download. So if, for some reason, there happen to be links deeper than 5, to meet your original wish to capture all URLs you might want to use the `-l` option, such as `-l 6` to go six deep (`-l depth`, `--level=depth`). `-l2` means 2 levels max.
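A small sketch of combining `-r` with an explicit `-l` depth is shown here. The helper name `recursive_cmd` and the example.com URL are placeholders, and the command is printed so it can be reviewed before being run.

```shell
#!/bin/sh
# Print a recursive wget command with an explicit depth limit.
# Usage: recursive_cmd DEPTH URL
recursive_cmd() {
    depth="$1"
    url="$2"
    # -r enables recursion; -l caps how many link levels are followed.
    printf 'wget -r -l %s %s\n' "$depth" "$url"
}

recursive_cmd 2 https://example.com/
recursive_cmd 6 https://example.com/
```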

Specify the maximum recursion depth level `depth`. If you can't find an entire folder of the downloads you want, wget can still help. Control Panel > System and Security > System > Advanced system settings.

Recursive FTP Download. HTTP has no concept of a directory; Wget relies on you to indicate what's a directory and what isn't. `aria2c --file-allocation=none -c -x 10 -s 10 -d mydir`.
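Because HTTP has no directory concept, the trailing slash is the only hint Wget gets. The sketch below mirrors that rule in a hypothetical helper, `url_kind`. It illustrates the convention and is not Wget's own code.

```shell
#!/bin/sh
# Classify a URL by its trailing slash, following the convention in the text:
# a trailing slash means "directory", anything else means "file".
url_kind() {
    case "$1" in
        */) echo directory ;;
        *)  echo file ;;
    esac
}

url_kind http://foo/bar/
url_kind http://foo/bar
```

This matters most with `--no-parent`, where the starting URL decides which level counts as the current directory.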

This will work; it will copy the website locally. On the next screen, choose Environment Variables. Using wget, you can make such a copy easily.
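For the offline copy mentioned above, one commonly used combination of options is sketched below. The helper name `offline_copy_cmd` and the example.com URL are assumptions, and the command is printed instead of executed.

```shell
#!/bin/sh
# Print a wget command suited to making a browsable offline copy.
offline_copy_cmd() {
    url="$1"
    # --mirror           recursion with unlimited depth plus timestamping
    # --convert-links    rewrite links to point at the local files
    # --page-requisites  also fetch the images and stylesheets pages need
    # --no-parent        never ascend above the starting directory
    printf 'wget --mirror --convert-links --page-requisites --no-parent %s\n' "$url"
}

offline_copy_cmd https://example.com/docs/
```

Because `--mirror` removes the depth limit, pointing it at a subdirectory with `--no-parent` is a simple way to keep the copy bounded.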

Contribute to aes512/wget-android development by creating an account on GitHub. Make sure you run the wget command in the same directory where the first download started. It lets you download files from the internet via FTP, HTTP, or HTTPS (web pages, PDFs, XML sitemaps, etc.).

Wget Some Examples Of What Can Be Done With This Tool Ubunlog

Panduan Pemula Untuk Menggunakan Wget Di Windows Geekmarkt Com

How To Install Wget On A Debian Or Ubuntu Linux Nixcraft

Contoh Penggunaan Wget Command

Wget File Download On Linux Linuxconfig Org

Backup Site Recursively From Ftp With Wget Backup And Recovery Backup Site Web Design

Wget How To Download Website Offline With Authenticated Username And Password Ask Ubuntu

Contoh Penggunaan Wget Command Windows Linux Niagahoster

Linux Wget How Linux Wget Command Works Programming Examples

Download Gnu Wget Linux 1 20 3

Cara Clone Website Menggunakan Wget Command

How To Use Wget To Download All Files In A Directory Programmer Sought

Github Pelya Wget Android Wget Ported To Android

Learn Basic Linux Commands With This Downloadable Cheat Sheet Linux Linux Operating System Cheat Sheets